The Macro: The Feedback Loop Is Broken and Everyone Knows It

Here’s the thing about customer feedback tools: there are a lot of them, and most of them are solving the wrong half of the problem. They make it easy to collect feedback. They do almost nothing about the quality of that feedback.

Product teams are drowning in tickets that say “the button doesn’t work” with no browser info, no steps to reproduce, no context. You send a follow-up. The user has moved on. Three days pass. Nothing gets fixed because nobody actually knows what broke.

The customer success software market is genuinely large. Multiple research sources peg it somewhere between $1.86 billion and $2.45 billion in 2025, with projections pointing toward $9 billion or more by 2032. That’s a lot of money chasing a problem that still feels unsolved at the ground level, which, look, should tell you something.
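For a sense of what those projections imply, here is a quick back-of-the-envelope sketch of the annual growth rate baked into them. It assumes the 2032 figure is exactly $9 billion (the projections say "or more", so this is a floor) and a seven-year window from 2025; the function name is just for illustration.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate needed to go from start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in billions of dollars.
LOW_2025, HIGH_2025 = 1.86, 2.45
PROJECTED_2032 = 9.0  # assumption: treat "$9 billion or more" as exactly 9
YEARS = 2032 - 2025

# Starting from the higher 2025 estimate gives the slower implied growth rate.
slow = implied_cagr(HIGH_2025, PROJECTED_2032, YEARS)
fast = implied_cagr(LOW_2025, PROJECTED_2032, YEARS)

print(f"Implied annual growth: {slow:.1%} to {fast:.1%}")
```

Under those assumptions, the market would need to grow at roughly 20 to 25 percent a year, every year, to get there.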

The players in this space tend to cluster around dashboards, health scores, and automated outreach. Think Gainsight, Totango, ChurnZero. They’re built for customer success managers who want to see account risk at a glance. They are not built for the moment a confused user is staring at a broken checkout page at 11pm and just needs to show someone what they’re seeing.

That gap is real. I’ve watched product managers I respect spend hours reconstructing bug reports from five-word Slack messages. Helply is betting on AI-assisted support agents to cut that back-and-forth. Others are attacking onboarding, like Obi, which is trying to use voice AI to stop users from abandoning before they even get started. Woise is going after the feedback submission moment itself, which is a narrower bet, but maybe a smarter one.

The Micro: Record Your Screen, Talk Into the Void, Actually Get Understood

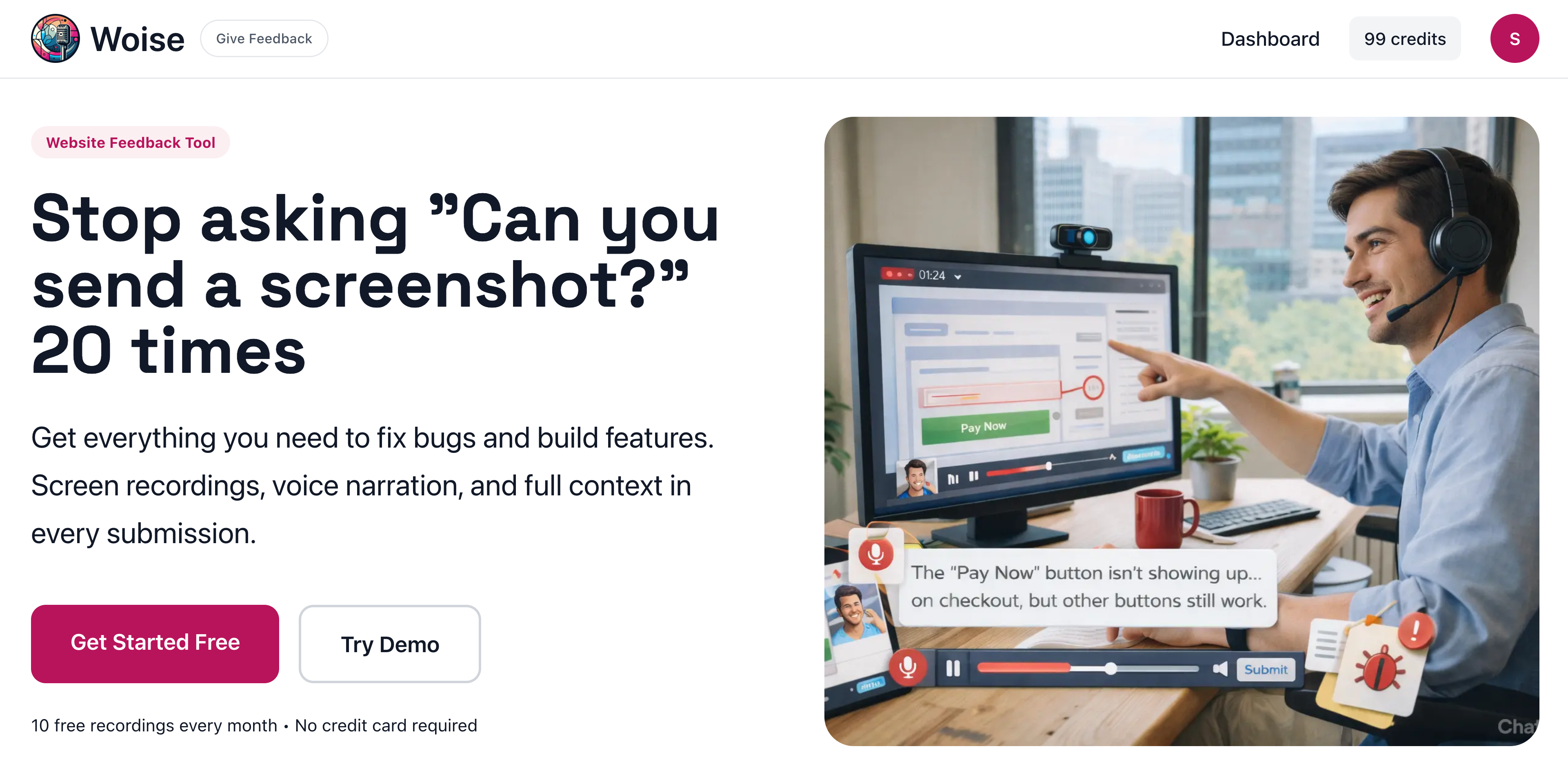

Woise is a website feedback widget. You embed it, your users click it, and instead of typing a paragraph they’ll never finish, they record their screen and narrate what they’re seeing out loud. The AI transcribes the audio. You get a video, a text summary, and automatic browser and device detection. That last part matters more than it sounds.

The site lists three capture modes. Screen recording plus voice is the flagship and, according to the site, the most popular. There’s also voice-only with screenshots, where users can take up to five stills while narrating, which is good for feature requests or longer explanations. The site content cuts off before fully describing the third mode.

The AI transcription piece is the part I find genuinely interesting. The insight is simple: people don’t hate giving feedback, they hate writing it. If you let someone talk for thirty seconds instead of composing three paragraphs, you get more detail, more emotion, and more context. The transcript gives you something skimmable. The video gives you the full picture. That combination is actually useful.

It launched with solid traction on Product Hunt, landing at daily rank eight.

The free tier is ten recordings per month, no credit card required. That’s a reasonable way to get an engineering team or a solo founder to actually try it on a real project before committing to anything.

I’d be curious how the recordings are stored and for how long. I’d also want to know whether there’s any way to tag or triage submissions inside the tool, or whether it just dumps everything into a list and you figure it out. For small teams that’s fine. For anyone with real volume, it could get messy fast.

The Verdict

Woise is not overhyped. It’s actually underselling itself a little. The positioning is “stop asking for screenshots” but the real value is structured, searchable, high-context feedback that doesn’t require users to be articulate writers. That’s a more interesting product than the homepage quite communicates.

The risk is that it lives in a crowded neighborhood. Loom exists. Jam exists. Userback exists. Every one of those tools lets users record their screen. Woise’s differentiation is the voice-to-text layer plus the widget-first positioning, but I’d want to see how they hold that ground when Loom adds a feedback widget or when an existing bug tracker bolts on screen recording.

Here’s the thing about the next 30, 60, 90 days: the question isn’t whether someone will try it. The free tier will get people in the door. The question is whether the transcripts are actually good enough that product managers start treating them as primary input rather than a nice supplement. If the AI summaries are sharp and the integration story gets stronger, this becomes a real habit. If the transcripts are mediocre, people will skim them once and go back to Slack.

I’d want to see where they sit on integrations: Jira, Linear, GitHub. That’s the moat, or the absence of one. SaaS tools that solve one problem well but require manual work to connect to everything else tend to plateau fast.

The idea is good. I just want to see the execution keep up.