The Macro: Analytics Has a Bot-Shaped Blind Spot

The analytics market is enormous. Depending on which research firm you believe, it sits somewhere between $64 billion and $91 billion today, with projections pushing $300-500 billion by the early 2030s. And somehow, through all of that growth, the industry has largely looked away from the fact that a significant portion of the traffic being measured isn’t human. It’s bots. Increasingly, it’s AI bots.

GA4 is what most of the web runs on for free analytics. It’s client-side, JavaScript-dependent, and built from the ground up to count human sessions happening inside a browser. That architecture made sense in 2012. It makes considerably less sense now that the companies behind ChatGPT, Gemini, and Claude are all sending crawlers across the web to train models, answer questions, and serve your content to users who never actually visit your site. None of that activity shows up in GA4. A tag that only fires when a browser executes JavaScript has no way to capture it.

This isn’t a niche concern for paranoid webmasters.

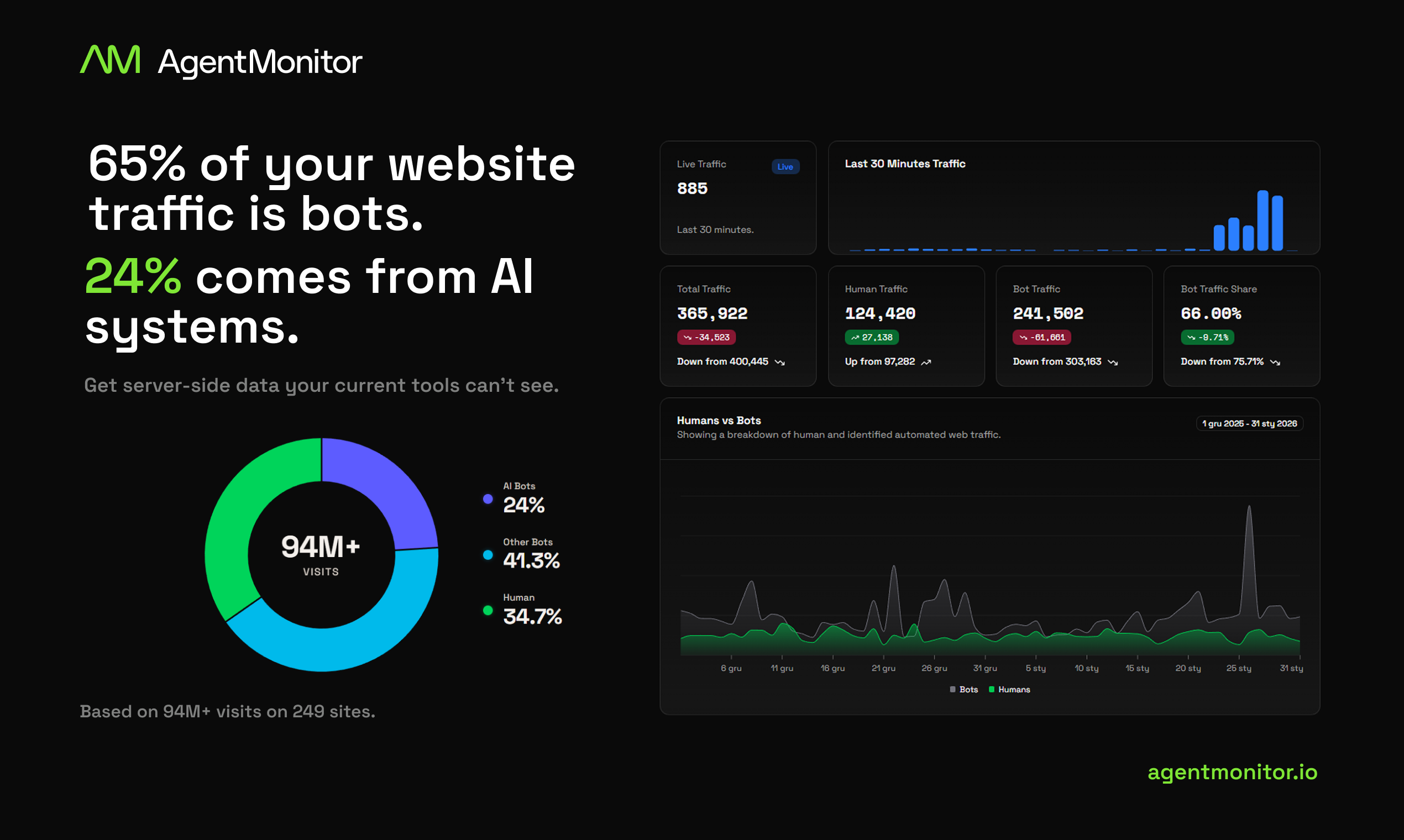

If Agent Monitor’s data from its own install base holds up, 65% of the 94 million visits it recorded across 249 sites came from bots. If that ratio is anywhere near typical, the average site owner is working with a fundamentally distorted picture of who their audience actually is. SEO professionals are in a particularly uncomfortable position. They’re optimizing for visibility inside AI assistants they have no way to measure, using tools designed for a web that no longer quite exists.

Enterprise bot detection exists, in products like Cloudflare’s Bot Management and Akamai’s Bot Manager. But those are security tools first, priced for security budgets. The independent publisher or scrappy SEO shop has no real option here. That gap is genuine, and it keeps getting wider.

The Micro: Server-Side Honesty in a Client-Side World

Agent Monitor’s core technical argument is simple and, I think, correct. If you want to see bots, you have to look at server-side signals. Most crawlers don’t execute JavaScript. They don’t fire your GA4 tag. They don’t interact with your cookie consent modal. They hit your server, take what they need, and leave. The only place you can reliably observe that behavior is in your server logs or through a server-side integration, which is exactly where Agent Monitor sits.
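To make the server-side argument concrete, here is a minimal Python sketch of the general idea. It is not Agent Monitor’s implementation. It reads access-log lines in the Apache/Nginx "combined" format and tallies requests by AI crawler user-agent token. The token list is an illustrative assumption and far from exhaustive, the sample log lines are invented, and helper names like `classify_agent` are hypothetical.

```python
import re
from collections import Counter

# Illustrative, non-exhaustive list of AI crawler user-agent tokens.
# This is an assumption for the sketch, not Agent Monitor's classification list.
AI_CRAWLER_TOKENS = ("GPTBot", "ChatGPT-User", "ClaudeBot", "PerplexityBot", "CCBot")

# "Combined" log format: the user agent is the last double-quoted field.
COMBINED_LOG = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def classify_agent(agent: str) -> str:
    """Return the first matching AI crawler token, or 'other' for everything else."""
    for token in AI_CRAWLER_TOKENS:
        if token in agent:
            return token
    return "other"


def count_ai_crawler_hits(lines) -> Counter:
    """Count requests per crawler token; lines that don't parse are skipped."""
    counts = Counter()
    for line in lines:
        match = COMBINED_LOG.match(line)
        if match is None:
            continue  # not in combined format
        counts[classify_agent(match.group("agent"))] += 1
    return counts


# Invented sample lines using documentation-reserved IP addresses.
sample_log = [
    '203.0.113.7 - - [10/Oct/2024:13:55:36 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.1; +https://openai.com/gptbot)"',
    '198.51.100.4 - - [10/Oct/2024:13:55:40 +0000] "GET / HTTP/1.1" 200 9001 "-" '
    '"Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '192.0.2.10 - - [10/Oct/2024:13:56:02 +0000] "GET /blog HTTP/1.1" 200 4200 '
    '"https://example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

print(count_ai_crawler_hits(sample_log))
```

Matching on the user agent alone is the weak point of this approach: the header is trivially spoofed, which is why serious bot classification also checks signals such as the source IP ranges some crawler operators publish. The sketch skips that step.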

Integration works through CloudShare, a CMS plugin, or an NPM package. The two-week free trial skips the credit card requirement upfront, which is a smart call for a tool asking you to touch server configuration. Once connected, reports are reportedly available immediately, with no manual configuration or ongoing tuning required.

The output includes bot profiles, per-bot traffic rankings, breakdowns by specific AI crawler (GPTBot, Gemini’s crawler, ClaudeBot, and others), and global benchmarks drawn from their aggregate install base. That last piece is actually the most interesting thing here. Knowing that 65% bot traffic is roughly normal for a site in your category, or that you’re getting hit by AI crawlers at three times the category average, is context no single-site tool can give you. It requires network data. That’s a real differentiator if the install base keeps growing.
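A per-bot ranking and a "three times the category average" comparison reduce to simple arithmetic once you have request counts. The Python sketch below illustrates that arithmetic under stated assumptions: `CATEGORY_BENCHMARK` is a hypothetical table of per-crawler traffic shares standing in for the network-wide data the product draws on, and every number in it is invented for illustration.

```python
from collections import Counter

# Hypothetical category benchmark: the share of all requests each crawler accounts
# for on a typical site in the same category. Real figures would come from network data.
CATEGORY_BENCHMARK = {"GPTBot": 0.04, "ClaudeBot": 0.06}


def rank_bots(counts: Counter, total_requests: int):
    """Rank crawlers by request count, with each one's share of total traffic."""
    return [(bot, hits, hits / total_requests) for bot, hits in counts.most_common()]


def benchmark_ratios(counts: Counter, total_requests: int, benchmark: dict) -> dict:
    """Site share divided by benchmark share per crawler (1.0 = typical, 3.0 = 3x)."""
    ratios = {}
    for bot, _hits, share in rank_bots(counts, total_requests):
        expected = benchmark.get(bot)
        if expected:  # skip crawlers that have no benchmark entry
            ratios[bot] = round(share / expected, 2)
    return ratios


# Invented counts for a single site that served 10,000 requests in total.
site_counts = Counter({"GPTBot": 1200, "ClaudeBot": 600, "PerplexityBot": 50})
print(rank_bots(site_counts, 10_000))
print(benchmark_ratios(site_counts, 10_000, CATEGORY_BENCHMARK))
# {'GPTBot': 3.0, 'ClaudeBot': 1.0}
```

In this toy example GPTBot takes 12% of the site’s requests against a 4% benchmark, which is the "three times the category average" kind of signal a single-site tool can’t produce without network data behind the benchmark.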

It got solid traction on launch day, and the fact that it had already processed 94 million visits across 249 sites before launch suggests this wasn’t vaporware going in.

The tool was built by an SEO agency solving their own problem first. I’d want to know whether that produces sharp product instincts or a product that’s quietly too specific to their use case to generalize well. Still an open question. Pricing structure beyond the trial period is also unclear, and I’d want to understand how the classification algorithm handles novel crawlers that haven’t been identified yet.

The Verdict

Agent Monitor is solving a real problem. Most web analytics vendors are either ignoring the bot visibility gap or quietly benefiting from leaving it alone, since inflated traffic numbers are flattering until a client starts asking hard questions. The server-side approach is technically sound. The benchmark data is a genuine differentiator, not a marketing feature.

I think this works well for SEO agencies, independent publishers, and site owners who already suspect their analytics are lying to them and want proof. I’m more skeptical that it breaks through with general-purpose marketers who don’t already feel the pain. The people most likely to care are the people who already know what GPTBot is.

The path to success is fairly clear. Sign up enough sites quickly enough that the benchmark data becomes statistically meaningful, then find a pricing tier that fits the independent publisher or mid-size SEO shop, not just the agency with a real budget. The product’s long-term value depends almost entirely on whether that benchmark layer becomes something practitioners actually cite when making decisions.

The risk worth taking seriously is Cloudflare. They have the infrastructure, they’ve shown genuine appetite for moving into analytics-adjacent territory, and for them this would be a feature addition, not a product build. That’s not a distant hypothetical.

At 30 days, I want to see what retention looks like after the trial. At 90 days, I want to know whether the bot classification is keeping pace with how fast AI crawlers are actually multiplying.

The product barely hyped itself at all. That’s either quiet confidence or a real marketing problem. Probably worth finding out which before Cloudflare makes the question irrelevant.