The Macro: The Form Is a Design Failure We Just Stopped Noticing

Somewhere between the invention of the web form and the current moment where AI can hold a coherent conversation, we collectively decided that dropdowns and radio buttons were fine. They are not fine. Completion rates on traditional surveys are notoriously bad, and dropout spikes the moment a form feels long. It always feels long. Grids of questions feel like homework because they basically are.

Voice and conversational interfaces have been circling this problem for years without a clean landing. Typeform made forms feel less terrible through better UX. Tools like SurveyMonkey’s conversational mode and Voiceform have taken real runs at it. Deepgram, whose voice API Kollect’s developer reportedly built the product on, made low-latency transcription accessible to indie developers in a way that simply wasn’t possible three or four years ago. The infrastructure got cheap enough that one developer can now ship something that would’ve required a funded team in 2020.

The open-source services market is also in a sustained growth phase. Multiple research firms peg its growth at roughly 15 to 17% CAGR through the late 2020s and into the 2030s, with market size estimates ranging from around $18 billion to over $35 billion. The spread tells you something about how loosely “open source services” gets defined, but the direction is consistent. The self-hostable, privacy-first positioning Kollect leans into isn’t just ideological at this point. It’s a real procurement consideration, especially in Europe and in enterprise contexts where data residency matters.

Building open-source-first is a go-to-market strategy now. Not just a philosophical stance.

The real question is whether voice-first surveys are a vitamin or a painkiller. The completion rate argument says painkiller. But we’ve been told that before.

The Micro: What Kollect Actually Does (and the Bets It’s Making)

Kollect’s core mechanic is simple. Instead of presenting a user with a static form, it starts a voice conversation. The AI listens to spoken responses, adapts follow-up questions based on what it hears, and guides the respondent through the survey the way a good interviewer would. The site claims up to 3x higher completion rates than traditional forms. That’s a number that needs more than a landing page to validate, but the directional logic isn’t crazy. Talking is lower friction than typing for most people in most situations.
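The adaptive part is what separates an interviewer-style flow from a branching form: the next question depends on what the respondent actually said, not on which radio button they clicked. Kollect relies on its AI model for that decision; the sketch below swaps in a far cruder keyword-rule table purely to show the control flow (transcript in, next question out). `FollowUpRule` and `pickFollowUp` are hypothetical names, not part of Kollect’s codebase.

```typescript
// Hypothetical stand-in for adaptive follow-ups. A real system would ask a
// model to choose the next question; here a keyword table plays that role.
interface FollowUpRule {
  triggers: string[]; // words that, if spoken, trigger this follow-up
  followUp: string;   // the question to ask next
}

// Returns the first matching follow-up question, or the fallback if none match.
function pickFollowUp(
  transcript: string,
  rules: FollowUpRule[],
  fallback: string | null = null,
): string | null {
  // Tokenize the transcribed answer into lowercase words.
  const words = new Set(transcript.toLowerCase().match(/[a-z']+/g) ?? []);
  for (const rule of rules) {
    if (rule.triggers.some((t) => words.has(t.toLowerCase()))) {
      return rule.followUp;
    }
  }
  return fallback;
}

const rules: FollowUpRule[] = [
  { triggers: ["slow", "lag"], followUp: "Which part felt slow?" },
  { triggers: ["price", "expensive"], followUp: "What would feel like a fair price?" },
];
```

Swapping the keyword table for a model call changes how the decision gets made, not the shape of the loop, which is why the quality of that decision is where the product lives or dies.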

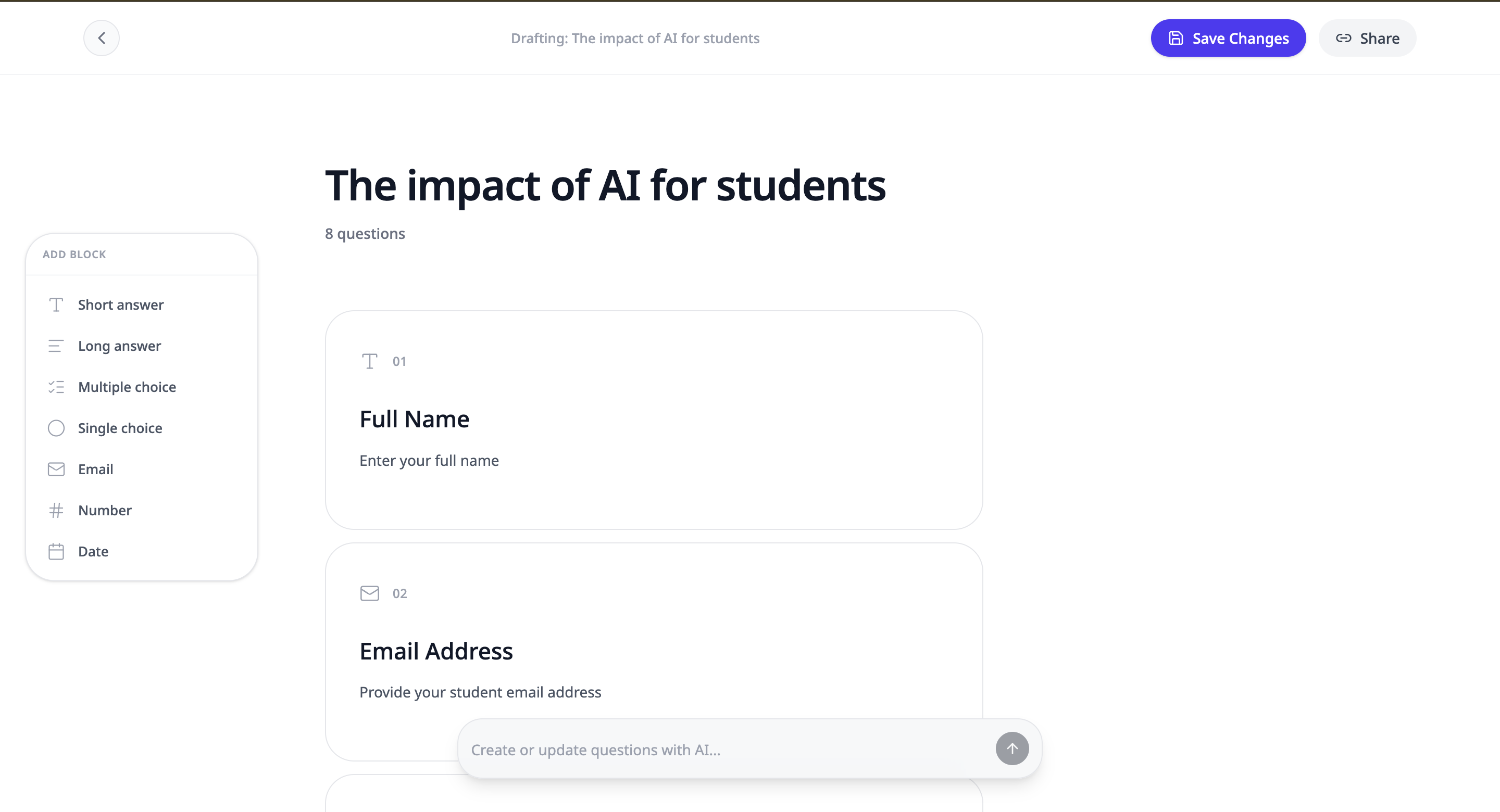

On the builder side, you can either drag and drop standard block types (short answer, multiple choice, email, date, number) or describe what you want in plain language and let the AI generate the structure. That second path is the more interesting one.
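To make that block vocabulary concrete, here’s a minimal sketch of how the five block types might be modeled as a TypeScript discriminated union, plus a validator for a respondent’s answer. The type names, the fields (`id`, `prompt`, `options`, `maxLength`, `min`, `max`), and the `validateAnswer` helper are hypothetical illustrations, not Kollect’s actual schema or API.

```typescript
// Hypothetical schema for the five block types the builder exposes.
// Names and fields are illustrative assumptions, not Kollect's real API.
type Block =
  | { kind: "short_answer"; id: string; prompt: string; maxLength?: number }
  | { kind: "multiple_choice"; id: string; prompt: string; options: string[] }
  | { kind: "email"; id: string; prompt: string }
  | { kind: "date"; id: string; prompt: string }
  | { kind: "number"; id: string; prompt: string; min?: number; max?: number };

// Deliberately loose email check; production validation would be stricter.
const EMAIL_PATTERN = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

// Returns true when a raw (e.g. transcribed) answer is acceptable for the block.
function validateAnswer(block: Block, answer: string): boolean {
  const value = answer.trim();
  switch (block.kind) {
    case "short_answer":
      return value.length > 0 &&
        (block.maxLength === undefined || value.length <= block.maxLength);
    case "multiple_choice":
      return block.options.includes(value);
    case "email":
      return EMAIL_PATTERN.test(value);
    case "date": {
      // Accept ISO 8601 calendar dates (YYYY-MM-DD) that name a real day.
      if (!/^\d{4}-\d{2}-\d{2}$/.test(value)) return false;
      const parsed = new Date(`${value}T00:00:00Z`);
      return !Number.isNaN(parsed.getTime()) &&
        parsed.toISOString().slice(0, 10) === value;
    }
    case "number": {
      if (value === "") return false;
      const n = Number(value);
      if (!Number.isFinite(n)) return false;
      if (block.min !== undefined && n < block.min) return false;
      if (block.max !== undefined && n > block.max) return false;
      return true;
    }
    default: {
      // Exhaustiveness check: the compiler errors here if a kind is unhandled.
      const unhandled: never = block;
      return unhandled;
    }
  }
}
```

A structure like this is also a plausible target for the plain-language path: the AI emits blocks, and a validator like the one above checks them before anything reaches a respondent.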

If the AI form generation works reliably, it dramatically shortens the path from “I have a research question” to “I have a deployed survey.” That’s the real product bet underneath the voice interface.

The codebase is written in TypeScript, a sensible choice for a project that wants external contributors: type annotations make an unfamiliar open-source codebase noticeably easier to onboard into. The GitHub repo is public under the MIT license, and the product can be self-hosted on your own server, AWS, GCP, or Azure. A managed cloud option exists for people who don’t want to run their own stack, though it’s currently labeled a “limited demo.”

It got solid traction on launch day, which suggests the concept resonates with developers and early-adopter product people. Thirteen comments is thin, though. That’s a community that looked, nodded, and mostly moved on. Engagement depth matters for telling genuine enthusiasm apart from passive interest, and right now the ratio of comments to overall attention doesn’t scream conviction.

The Deepgram dependency for voice processing is worth thinking about. This isn’t a knock on Deepgram, which is solid infrastructure, but it means a usage-based cost is baked into the voice feature, and self-hosters need to account for it before committing.
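For budgeting, the back-of-the-envelope math is simple. The sketch below assumes speech-to-text is billed per audio minute and takes the rate as a parameter instead of quoting one, since plans and models differ and prices change; check Deepgram’s current pricing for the real figure. The `VoiceUsage` shape and `estimateMonthlyTranscriptionCost` function are hypothetical.

```typescript
// Hypothetical inputs for estimating monthly speech-to-text spend.
// The per-minute rate is a placeholder you supply from current pricing.
interface VoiceUsage {
  sessionsPerMonth: number;           // voice sessions *started*, not just completed
  avgAudioMinutesPerSession: number;  // average billable audio per session
  ratePerAudioMinute: number;         // price per transcribed minute (assumed billing unit)
}

function estimateMonthlyTranscriptionCost(usage: VoiceUsage): number {
  const { sessionsPerMonth, avgAudioMinutesPerSession, ratePerAudioMinute } = usage;
  const inputs = [sessionsPerMonth, avgAudioMinutesPerSession, ratePerAudioMinute];
  if (inputs.some((v) => !Number.isFinite(v) || v < 0)) {
    throw new RangeError("usage inputs must be finite, non-negative numbers");
  }
  // Abandoned sessions still produce billable audio, so count every start.
  const totalMinutes = sessionsPerMonth * avgAudioMinutesPerSession;
  return totalMinutes * ratePerAudioMinute;
}
```

Counting started sessions rather than completed ones is the design choice that matters here: abandoned conversations still cost money. If you’d rather budget per usable response, divide the result by the number of completed sessions.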

The Verdict

Kollect is a well-reasoned bet on two things that are probably both true. Forms are genuinely bad UX, and voice interfaces have crossed a usability threshold where they’re no longer embarrassing to deploy. The open-source, self-hostable positioning is smart differentiation in a market where data privacy concerns are real and, depending on your geography, increasingly regulatory.

Here’s what I’d want to know before getting genuinely excited. Does the adaptive AI produce better-quality responses, or just more responses? Completion rate is a metric. Data quality is the actual goal. The 3x claim needs a source, not a bullet point. I’d also want to see the managed cloud mature. The “limited demo” framing tells me production use cases are going to hit rough edges.

At 30 days, I’m watching GitHub stars and whether any non-trivial organizations actually self-host it. At 60 days, the question is whether AI form generation holds up across diverse use cases or works great for customer feedback and falls apart for anything more structured. At 90 days, I want to know if a community is forming around the repo, or if this is a solo project that launched well and then plateaued.

This is probably a genuinely useful tool for developers, researchers, and small teams doing user research who are comfortable with some DIY setup. I don’t think it’s ready for organizations that need production-grade reliability and lack the technical resources to troubleshoot it themselves. The ceiling is real. So is the execution risk.