The Macro: AI Coding Workflows Are Messy and Everyone Knows It

The engineering software market sits somewhere between $65 and $73 billion for 2024–2025, depending on which research source you trust. Projections have it doubling or tripling by the mid-2030s. That’s a lot of capital chasing a question nobody has cleanly answered yet: how do you make AI coding agents actually useful in production, not just in a demo someone recorded at 2am?

The crowding in AI coding tools hasn’t produced clarity. It’s produced noise.

GitHub Copilot is the path of least resistance. Cursor has a devoted following among developers who want more control over what’s happening. Then there are Claude’s code-native features, Devin, Aider, and a dozen others, each with its own opinion about what “agentic” means. What they share is an assumption that the agent’s individual capability is the thing worth optimizing. The workflows wrapping these tools are mostly improvised. Duct tape. Vibes.

Here’s the pattern that actually matters: model capability improved faster than anyone expected, and the bottleneck quietly moved. It’s no longer “can the model write code.” It’s “can the model write the right code, in the right order, without wrecking what you built yesterday.” That’s a process problem. And it compounds badly. The more autonomy you give an agent, the more expensive its mistakes get.

The job market context matters here too. One well-sourced industry newsletter put engineering headcount at roughly 22% below its early 2022 peak, with no clear recovery signal. Smaller teams are being asked to ship more. A structured agentic workflow that keeps an AI from confidently going sideways isn’t an abstract nice-to-have. It’s an operational need that a lot of teams are feeling right now.

The Micro: Seven Slash Commands and a Theory of How Software Gets Built

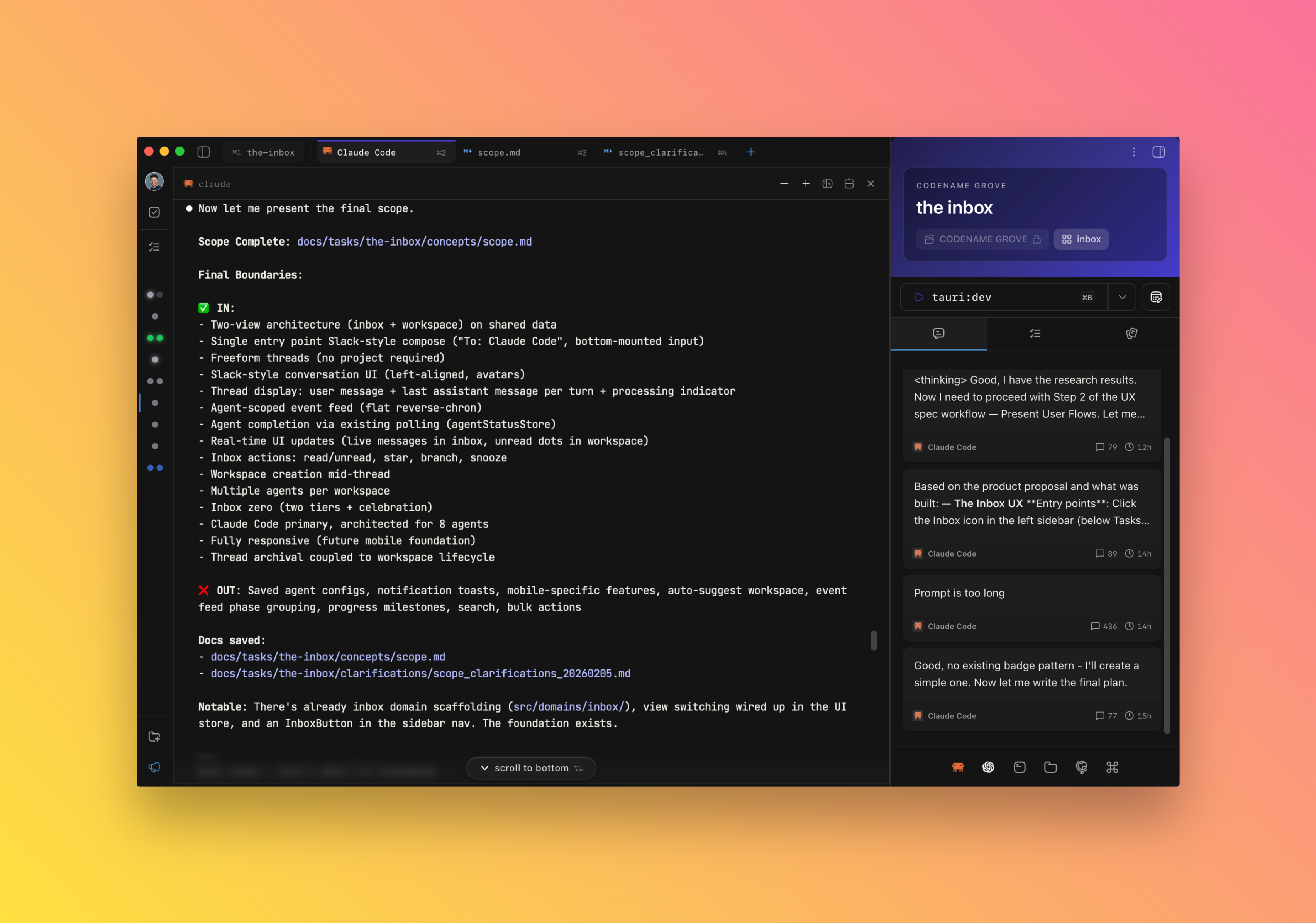

SPECTRE is an open-source agentic coding workflow hosted on GitHub under the Codename-Inc organization. The premise is simple enough to explain in one sentence: instead of throwing a prompt at an AI agent and hoping for coherent output, you walk it through a defined seven-stage pipeline of /Scope, /Plan, /Execute, /Clean, /Test, /Rebase, and /Evaluate. Each command is a discrete phase. The whole structure is designed to give agents the kind of scaffolding that keeps them from producing confident, plausible-sounding disasters.
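To make the “discrete phase” idea concrete, here is a minimal Python sketch of the sequencing discipline the pipeline implies: a gate that refuses to run a stage until the one before it has completed. This is not SPECTRE’s code. The repo ships slash commands for an agent, and the `Stage`, `Pipeline`, and `StageOrderError` names below are hypothetical, chosen only to model the ordering assumption.

```python
from enum import IntEnum


class Stage(IntEnum):
    """The seven stages, in the order the SPECTRE repo lists its commands."""
    SCOPE = 1
    PLAN = 2
    EXECUTE = 3
    CLEAN = 4
    TEST = 5
    REBASE = 6
    EVALUATE = 7


class StageOrderError(Exception):
    """Hypothetical error raised when a stage is attempted out of sequence."""


class Pipeline:
    """Hypothetical gatekeeper: a stage may only run after the previous one completes."""

    def __init__(self) -> None:
        self.completed: list[Stage] = []

    def run(self, stage: Stage) -> None:
        # Once all seven stages are done, there is nothing left to run.
        if len(self.completed) == len(Stage):
            raise StageOrderError("pipeline already complete")
        # The next permitted stage is the one immediately after the last completed stage.
        expected = Stage(len(self.completed) + 1)
        if stage != expected:
            raise StageOrderError(
                f"/{stage.name.title()} attempted before /{expected.name.title()}"
            )
        self.completed.append(stage)


if __name__ == "__main__":
    p = Pipeline()
    p.run(Stage.SCOPE)
    p.run(Stage.PLAN)
    try:
        p.run(Stage.TEST)  # skips /Execute and /Clean, so the gate rejects it
    except StageOrderError as err:
        print(err)
```

Running it prints `/Test attempted before /Execute`. The point of the sketch is the design choice, not the mechanism: an agent that can jump straight to testing or rebasing without a plan is exactly the failure mode a fixed stage order is meant to block.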

MIT licensed. That detail matters to me.

This isn’t a SaaS wrapper with a pricing page you have to decode. It’s a framework you can read, fork, and change. The repo had 83 stars and 10 forks at launch, which is modest. It’s also a project asking developers to rethink their entire agentic workflow rather than just install something, so modest is probably the realistic baseline.

The positioning targets “product builders” rather than pure engineers. That framing is deliberate and mostly accurate. The seven stages map cleanly onto how a product-minded developer actually thinks about shipping: scope it, plan it, build it, clean it, test it, reconcile it with existing state, and evaluate the result. It’s not trying to replace judgment. It’s trying to structure the execution of judgment you’ve already made.

It got solid traction on launch day, which suggests real interest rather than just a coordinated push.

The clearest gap in the repo is competitive differentiation. Aider has structured session management. A disciplined Cursor workflow with good prompt templates covers some of this ground too. What SPECTRE does that those approaches don’t, specifically and demonstrably, is still a partially open question.

The Verdict

SPECTRE is making a specific bet: the problem with AI coding agents is process discipline, not raw capability. That bet is probably right. The harder problem is that “structured workflow” is genuinely difficult to distribute. It requires behavior change from developers who already have habits, strong tool preferences, and not much patience for friction that doesn’t immediately pay off.

I think this is probably useful for product-minded developers who are already frustrated by how quickly agentic sessions go sideways. It’s a harder sell for engineers who have already built something that works for them, even if what works for them is technically messier.

At 30 days, I’d watch the GitHub stars trajectory. 83 at launch is a starting point, not a signal. At 60 days, community contributions to the workflow stages would tell me something real: a fork that adds an eighth command, say, or a substantive issue thread about /Rebase edge cases. That kind of activity separates actual adoption from curiosity. At 90 days, if there are no documented real-world project examples, SPECTRE risks becoming a framework people star and never open again.

What I’d want to know before fully endorsing it: does this work as well with Claude as with GPT-4o? Is model-agnostic a real claim or a theoretical one? And who at Codename-Inc is actually driving this? My research into the founders was inconclusive enough that I’m genuinely uncertain.

The open-source call is correct. The concept is sound. Whether SPECTRE becomes infrastructure or a footnote comes down to whether the team can build a community around the methodology. Not just the code.