The Macro: The AI Code Review Land Grab Is Already Crowded

Here’s the thing about AI developer tooling in 2025: the category is simultaneously overflowing and underdelivering. You’ve got GitHub Copilot doing code suggestions, CodeRabbit doing PR reviews, Sourcegraph’s Cody doing… a lot of things, and a dozen smaller players all claiming they’ll make your pull requests less painful. The market data goes some way toward explaining why everyone’s piling in: Market Research Future puts software engineering as a category at around $65–73 billion in 2024–2025, tracking toward $205 billion by 2035. There’s real money moving through this space.

The irony is that the engineering job market itself has contracted sharply. According to The Pragmatic Engineer’s newsletter, engineering headcount is still about 22% smaller than it was in January 2022. That compression creates a specific kind of pressure: smaller teams doing more review work with less bandwidth, which is exactly the gap AI code review tools are trying to fill. Whether that’s an opportunity or just a pile of VC money chasing the same thesis depends on who you ask.

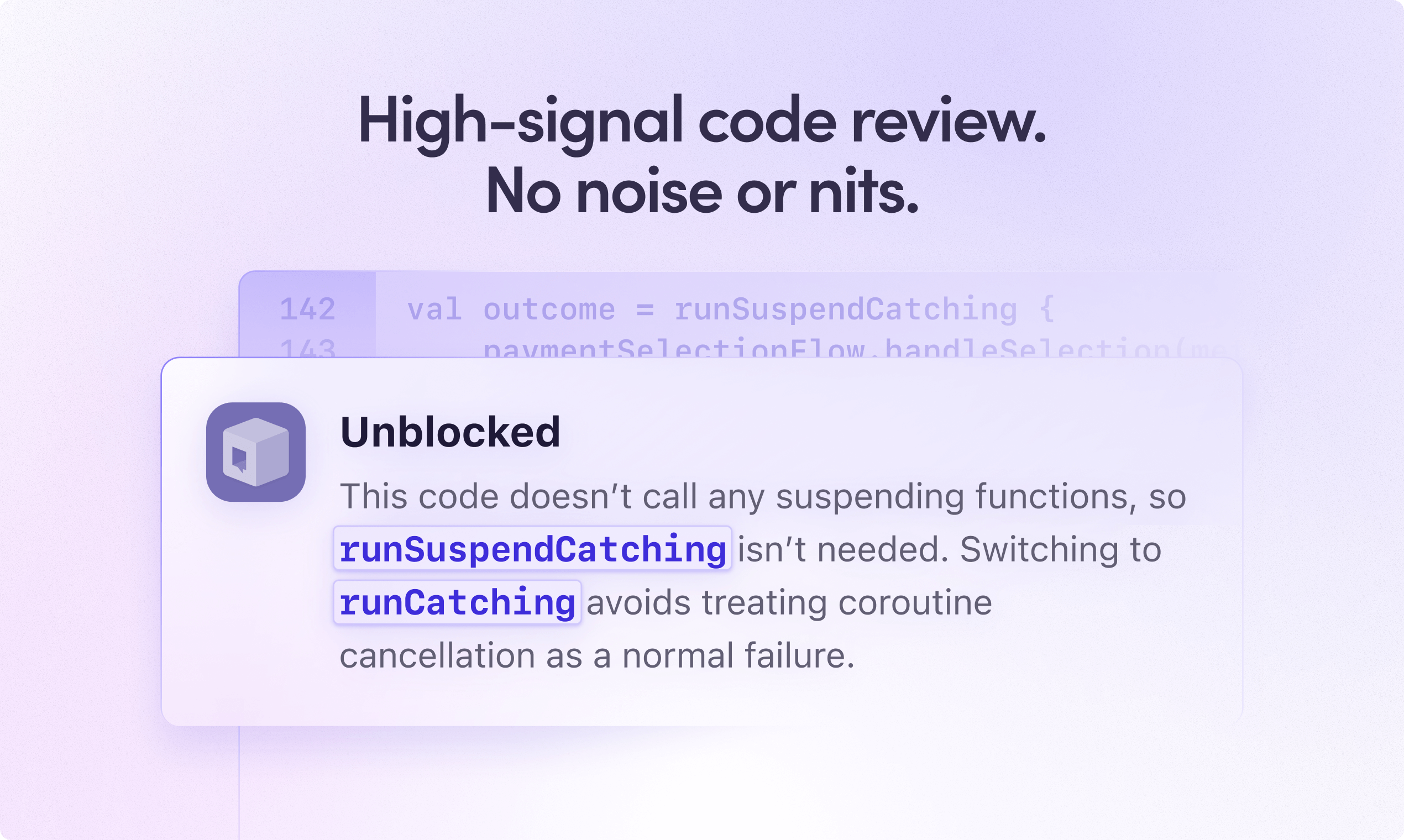

The actual product differentiation battle is happening around one question: does the AI understand your codebase, or is it just running a generic linter with a language model strapped on top? Most first-generation tools are closer to the latter — they see the diff, they leave comments, they occasionally flag something useful. The problem is that a diff stripped of context is a pretty thin slice of what a senior engineer would actually care about. Why was this pattern chosen? What did the team decide six months ago in that long Slack thread nobody can find? What does this PR break that the tests don’t cover? That’s the gap the better tools are now competing to close, and it’s a legitimately hard problem.

The Micro: It Read Your Jira Tickets (No, Really)

Unblocked Code Review’s core technical claim is that it pulls context from across your actual working environment (not just the repo, but Slack, Jira, PR history, and docs) and uses that context to decide what to flag. The practical implication: instead of a comment that says “this function is too long” (cool, thanks), you might get something like “this conflicts with the pattern your team adopted in PR #847 based on the discussion in #eng-backend on March 12th,” complete with a citation. That’s a meaningfully different artifact.

The citation model deserves specific attention. Comments from AI review tools often suffer from the same credibility problem as confident-but-wrong search results: they sound authoritative, but you can’t verify them quickly. Surfacing the source behind a recommendation (and Unblocked apparently links back to those sources) tackles that problem at the product level instead of just hoping users trust the output.

On the launch itself: 172 upvotes and a #8 daily rank on Product Hunt is a decent showing. It’s not a blowout, but it’s respectable for developer tooling, which historically punches below its weight on PH because the audience skews toward indie makers rather than enterprise engineering teams. The 15 comments are on the quieter side. The testimonials on the product site come from engineers at Clio, TravelPerk, AuditBoard, HeyJobs, and Drata, all recognizable names in the mid-market SaaS world. That suggests some real enterprise traction rather than just friendly beta users.

Dennis Pilarinos is listed as Founder & CEO (per LinkedIn). Beyond that, the research doesn’t tell us much about team background, funding, or user numbers, so we won’t speculate. The 21-day free trial with no card required is a smart product-led growth (PLG) move for a product that engineers need to actually experience before they’ll advocate for it internally.

The Verdict

The honest take: the architecture makes sense, and the frustration it’s targeting is real. Every engineer has been burned by an AI reviewer that left twelve comments about variable naming and missed the race condition. If Unblocked’s context-stitching actually works consistently (and that’s a meaningful if), it’s solving something that matters.

What would make this succeed at 30/60/90 days isn’t launch momentum; it’s retention after the trial. The first PR review that surfaces a genuinely non-obvious issue with a real citation is a conversion event. The tenth one is what makes it a sticky product. The failure mode is context retrieval that adds latency or noise of its own: pulling in too much irrelevant history and producing confident-sounding wrong answers with better footnotes.

Things we’d want to know before fully endorsing it: how does review quality hold up on larger, messier repos vs. cleaner mid-sized codebases? What’s the false-positive rate on the “high-signal” comments after a month of use? And, bluntly, what does pricing look like after the trial? That’s where enterprise PLG tools either convert or quietly get uninstalled.

Worth trying if you run a team that’s drowning in PR volume. Worth watching either way.